App-Layer Encryption in AWS

Encrypting application data has traditionally been complicated. It risks being done incorrectly, failing to actually improve security, hurting performance or availability, or even losing access to data. Square has long had encryption infrastructure in our data centers, but we wanted to make encrypting sensitive data self-service, safe, fast, and easy for Cash App services running in the cloud as well. We have been iterating on this design over the last year, and several services now use it in production to power live Cash App features. We thought this would be a good time to share how we did it.

Cloud encryption key management services such as AWS KMS make encrypting data easy and safe. In addition, since an application can call the KMS to encrypt or decrypt data itself, data can be encrypted or decrypted on-demand as close to its creation or use as possible. This minimizes the window of exposure where an attacker may get access to the data in plaintext. This is more secure than many at-rest encryption schemes where data is transparently decrypted for both authorized and unauthorized users alike.

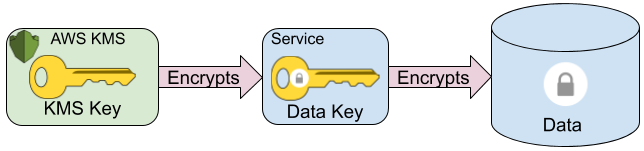

The amount of sensitive data to be encrypted varies and can be quite large. The AWS KMS Encrypt API limits plaintext to 4096 bytes for symmetric keys and even less for asymmetric keys. In addition, even though the API call is made over TLS, we don't necessarily want to expose all of our sensitive data as plaintext to the KMS. A better approach, called envelope encryption, improves security compared to the naive approach of sending the entire plaintext to the KMS. In envelope encryption, we generate a unique, single-use encryption key, called a data key, for each encryption operation and encrypt the data locally with it. We then send the data key to the KMS API to be encrypted by a key stored in the KMS. The data key is small; for example, a 256-bit AES key is only 32 bytes long. This also limits exposure, because an attacker able to observe communication with the KMS obtains only the data key and not the data encrypted with it. Finally, we store the encrypted data key returned by the KMS alongside the encrypted data.

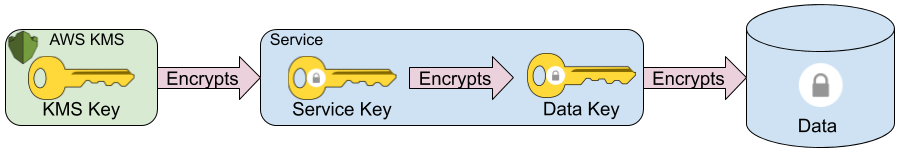
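
The envelope flow described above can be sketched in Java using only the JDK's AES-GCM support. This is a minimal illustration under stated assumptions, not Cash App's implementation: FakeKms is a hypothetical in-process stand-in for AWS KMS (a real service would call the KMS Encrypt and Decrypt APIs over the network), and the class, record, and method names are illustrative.

```java
import java.nio.ByteBuffer;
import java.security.GeneralSecurityException;
import java.security.SecureRandom;
import javax.crypto.Cipher;
import javax.crypto.KeyGenerator;
import javax.crypto.SecretKey;
import javax.crypto.spec.GCMParameterSpec;
import javax.crypto.spec.SecretKeySpec;

final class EnvelopeSketch {
    /** Hypothetical stand-in for AWS KMS: the master key never leaves this object. */
    static final class FakeKms {
        private final SecretKey masterKey = Aes.newKey();
        byte[] encrypt(byte[] plaintext) { return Aes.seal(masterKey, plaintext); }
        byte[] decrypt(byte[] ciphertext) { return Aes.open(masterKey, ciphertext); }
    }

    /** What gets stored: the KMS-encrypted data key alongside the locally encrypted data. */
    record Envelope(byte[] encryptedDataKey, byte[] ciphertext) {}

    static Envelope encrypt(FakeKms kms, byte[] plaintext) {
        SecretKey dataKey = Aes.newKey();                            // fresh single-use 256-bit data key
        byte[] ciphertext = Aes.seal(dataKey, plaintext);            // bulk data is encrypted locally
        byte[] encryptedDataKey = kms.encrypt(dataKey.getEncoded()); // only 32 bytes go to the KMS
        return new Envelope(encryptedDataKey, ciphertext);
    }

    static byte[] decrypt(FakeKms kms, Envelope envelope) {
        byte[] rawDataKey = kms.decrypt(envelope.encryptedDataKey()); // one KMS round-trip
        return Aes.open(new SecretKeySpec(rawDataKey, "AES"), envelope.ciphertext());
    }

    /** Minimal AES-256-GCM helpers; sealed layout is IV || ciphertext || tag. */
    static final class Aes {
        private static final SecureRandom RANDOM = new SecureRandom();
        private static final int IV_LEN = 12;    // 96-bit GCM nonce
        private static final int TAG_BITS = 128; // GCM authentication tag length

        static SecretKey newKey() {
            try {
                KeyGenerator gen = KeyGenerator.getInstance("AES");
                gen.init(256, RANDOM);
                return gen.generateKey();
            } catch (GeneralSecurityException e) {
                throw new IllegalStateException(e);
            }
        }

        static byte[] seal(SecretKey key, byte[] plaintext) {
            try {
                byte[] iv = new byte[IV_LEN];
                RANDOM.nextBytes(iv); // fresh random nonce per message
                Cipher cipher = Cipher.getInstance("AES/GCM/NoPadding");
                cipher.init(Cipher.ENCRYPT_MODE, key, new GCMParameterSpec(TAG_BITS, iv));
                byte[] ct = cipher.doFinal(plaintext);
                return ByteBuffer.allocate(IV_LEN + ct.length).put(iv).put(ct).array();
            } catch (GeneralSecurityException e) {
                throw new IllegalStateException(e);
            }
        }

        /** Throws IllegalArgumentException if the key is wrong or the data was altered. */
        static byte[] open(SecretKey key, byte[] sealed) {
            try {
                Cipher cipher = Cipher.getInstance("AES/GCM/NoPadding");
                cipher.init(Cipher.DECRYPT_MODE, key, new GCMParameterSpec(TAG_BITS, sealed, 0, IV_LEN));
                return cipher.doFinal(sealed, IV_LEN, sealed.length - IV_LEN);
            } catch (GeneralSecurityException e) {
                throw new IllegalArgumentException("decryption failed", e);
            }
        }
    }
}
```

Only the 32-byte data key is sent to the KMS stand-in, and each record's encrypted data key travels with its ciphertext, so decrypting later takes exactly one KMS call per record.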

Envelope Encryption with Service Keys

There are some downsides to this approach, however, that we wanted to work around. First, we didn't want the additional latency and availability risk of a round-trip to the KMS for every encrypt or decrypt of data. Second, we wanted to be able to replicate encrypted data to other regions as-is, without our application-layer encryption adding steps or complexity. Finally, we wanted to reduce costs, which would also let us apply encryption at a finer granularity. To achieve this, we added another level of cryptographic key to the basic envelope encryption scheme described above, which we call the service key.

In our scheme, each service is assigned a dedicated KMS key and uses it to envelope encrypt the service keys, which are managed within the service itself. Our application-layer encryption uses Google's open-source Tink crypto library, and these service key files are stored as serialized Tink keysets. The service key files are read at service startup and decrypted only in memory. From then on, they are used to envelope encrypt and decrypt sensitive data in the service's data store on demand.
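
A minimal Java sketch of the added service-key layer follows. It assumes a hypothetical CountingKms stand-in that counts round-trips, uses a raw AES key where the real system uses a serialized Tink keyset, and assumes (one reading of the text) that the in-memory service key envelope encrypts a fresh per-record data key. The property it illustrates is that the KMS is called once at startup rather than once per operation.

```java
import java.nio.ByteBuffer;
import java.security.GeneralSecurityException;
import java.security.SecureRandom;
import javax.crypto.Cipher;
import javax.crypto.KeyGenerator;
import javax.crypto.SecretKey;
import javax.crypto.spec.GCMParameterSpec;
import javax.crypto.spec.SecretKeySpec;

final class ServiceKeySketch {
    /** Hypothetical KMS stand-in that counts round-trips. */
    static final class CountingKms {
        private final SecretKey kmsKey = Aes.newKey();
        int calls = 0;
        byte[] encrypt(byte[] plaintext) { calls++; return Aes.seal(kmsKey, plaintext); }
        byte[] decrypt(byte[] ciphertext) { calls++; return Aes.open(kmsKey, ciphertext); }
    }

    /** A stored record: the data key (encrypted under the service key) plus the encrypted data. */
    record Sealed(byte[] encryptedDataKey, byte[] ciphertext) {}

    /** A running service instance (hypothetical). */
    static final class Service {
        private final SecretKey serviceKey; // plaintext service key exists only in memory

        /** Startup: a single KMS call decrypts the service key file. */
        Service(CountingKms kms, byte[] encryptedServiceKeyFile) {
            this.serviceKey = new SecretKeySpec(kms.decrypt(encryptedServiceKeyFile), "AES");
        }

        /** Envelope encryption with the service key playing the KMS's role: no network call. */
        Sealed encrypt(byte[] plaintext) {
            SecretKey dataKey = Aes.newKey();
            return new Sealed(Aes.seal(serviceKey, dataKey.getEncoded()), Aes.seal(dataKey, plaintext));
        }

        byte[] decrypt(Sealed sealed) {
            SecretKey dataKey = new SecretKeySpec(Aes.open(serviceKey, sealed.encryptedDataKey()), "AES");
            return Aes.open(dataKey, sealed.ciphertext());
        }
    }

    /** Minimal AES-256-GCM helpers; sealed layout is IV || ciphertext || tag. */
    static final class Aes {
        private static final SecureRandom RANDOM = new SecureRandom();
        private static final int IV_LEN = 12;    // 96-bit GCM nonce
        private static final int TAG_BITS = 128; // GCM authentication tag length

        static SecretKey newKey() {
            try {
                KeyGenerator gen = KeyGenerator.getInstance("AES");
                gen.init(256, RANDOM);
                return gen.generateKey();
            } catch (GeneralSecurityException e) {
                throw new IllegalStateException(e);
            }
        }

        static byte[] seal(SecretKey key, byte[] plaintext) {
            try {
                byte[] iv = new byte[IV_LEN];
                RANDOM.nextBytes(iv); // fresh random nonce per message
                Cipher cipher = Cipher.getInstance("AES/GCM/NoPadding");
                cipher.init(Cipher.ENCRYPT_MODE, key, new GCMParameterSpec(TAG_BITS, iv));
                byte[] ct = cipher.doFinal(plaintext);
                return ByteBuffer.allocate(IV_LEN + ct.length).put(iv).put(ct).array();
            } catch (GeneralSecurityException e) {
                throw new IllegalStateException(e);
            }
        }

        /** Throws IllegalArgumentException if the key is wrong or the data was altered. */
        static byte[] open(SecretKey key, byte[] sealed) {
            try {
                Cipher cipher = Cipher.getInstance("AES/GCM/NoPadding");
                cipher.init(Cipher.DECRYPT_MODE, key, new GCMParameterSpec(TAG_BITS, sealed, 0, IV_LEN));
                return cipher.doFinal(sealed, IV_LEN, sealed.length - IV_LEN);
            } catch (GeneralSecurityException e) {
                throw new IllegalArgumentException("decryption failed", e);
            }
        }
    }
}
```

For example, after one KMS call to provision the key file and one at startup, encrypting and decrypting a thousand records leaves the KMS call count unchanged.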

Square Mason Integration

At AWS re:Invent 2019, Cash App's Geoff Flarity and Jay Estrella described our cloud platform, including our dedicated cloud resource provisioning service, Square Mason. We also use Square Mason to create both the KMS keys and the service keys for our services. For a new service, a Cash App service engineer first creates a KMS key using sqm, the command-line client for Square Mason, with sqm service create-kms-keys:

```shell
sqm service create-kms-keys --service-name $SQM_SERVICE
```

This sends a request to the Square Mason service to provision a new KMS key for the service and create the appropriate IAM policies and KMS key policies. The service's IAM Role has only kms:Decrypt permissions on its KMS key. This permits the service to decrypt its service key files, which are envelope encrypted using the service's KMS key. The service's IAM Role does not have kms:Encrypt permissions on its KMS key, to ensure that service key rotations can only be performed through the Square Mason service.

The Cash App service engineer can then create service keys of whatever types are needed, also through sqm service create-service-keys:

```shell
sqm service create-service-keys --service-name $SQM_SERVICE \
  --environment $SQM_ENV \
  --output-dir ./service/src/main/resources/secrets \
  --key-basename <key_name> \
  --key-type <key_type>
```

This sends a request to the Square Mason service to generate Tink encryption keys of the specified type, envelope encrypted using the service's KMS key, and then writes the Tink keysets to the specified output directory. This ensures both that the service key material is generated securely in our production environment and that a copy of the service key is safely encrypted under the service's KMS key in each region where the service is deployed. It is safe to store the encrypted keys locally on the engineer's workstation, since they are encrypted by a KMS key that only authorized services running in production can access.

These KMS-encrypted Tink keysets are stored in git alongside the service's code and made available at runtime as a Java resource. It may be surprising that we store them in git rather than in the service's data store or a Kubernetes secret, but we chose this deliberately. These service keys are required to decrypt the service's sensitive data, so we wanted them to be as durable as possible. If they were stored somewhere that supported instantaneous deletion, an accidental or malicious deletion could crypto-shred a massive amount of encrypted data. When we do want to intentionally crypto-shred the service's data, we can delete its KMS key, which has a default 30-day waiting period before the key is permanently deleted. In addition, the git history gives us a basic audit log of the key's creation, rotation, and deletion.

Threat Model

We also designed this scheme to broadly mitigate the most common threats to cloud-based services. Decrypting sensitive data requires access to three things: the service's data store, the encrypted service key files, and the service's KMS key. The most common vulnerabilities in cloud services expose at most two of these at a time, and usually only one. To compromise the encrypted sensitive data, an attacker must instead exploit a vulnerability that either tricks the service into decrypting the wrong data and returning it to the attacker (e.g., SQL injection) or causes the service to execute the attacker's code or shell commands (e.g., remote code execution).

To illustrate this in more detail, let's consider each of the three most common threats to services running in the cloud and how our design defends against them.

Unauthorized access to the service’s data store

One of the most common vulnerabilities in the use of cloud infrastructure is inadvertently exposing data stores such as S3 buckets or Elasticsearch instances. If this happened to one of our services using application-layer encryption, an attacker could obtain the ciphertext of the service's sensitive data. Without access to the decrypted service key, however, they would not be able to decrypt it.

Unauthorized access to the service’s IAM Role

One of the most damaging vulnerabilities in the use of cloud infrastructure is leaked IAM credentials. For example, a Server-Side Request Forgery (SSRF) attack against an AWS EC2 instance's IMDSv1 metadata endpoint can let an attacker access cloud resources as the targeted service. This would give the attacker access to both the service's data stores and its key in KMS. In our application-layer encryption design, however, this access would not be sufficient to decrypt sensitive data, because the encrypted service keys cannot be retrieved through AWS APIs using the service's IAM Role credentials.

Unauthorized access to the service code or deployment artifacts

Another common vulnerability in cloud and container infrastructure is accidentally exposing container registries that hold production artifacts or source code repositories that hold service source code. We have a well-ingrained engineering culture of not embedding secrets in application code, because we long ago built infrastructure that makes doing so unnecessary. In this application-layer encryption design, we do include encrypted service keys in both the service's source code and its deployment artifact. Even if an attacker gained access to either, however, it would not be sufficient to decrypt sensitive data, because they would also need access to the service's production IAM Role to read data from the data store and to decrypt the service keys using the service's KMS key.

Lessons Learned

Distributed systems always add challenges, and this system was no exception. We knew we wanted to support service key rotation, both as a security best practice and to support cryptographic expiration of data past its retention period. When rotating service keys for a deployed service with multiple replicas, a naive key rotation can lead to undecryptable data: instances that receive the new key will use it for new data, but instances that have not yet received it will be unable to decrypt that data. To solve this, we designed a two-phase rotation protocol. First, we add the new key to the keyset of every instance but continue encrypting data with the old key. Once we confirm that all instances have received the new key, we switch to it for all new data.
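
The two-phase rotation can be sketched in Java as an immutable keyset in which every key can decrypt but only the primary key encrypts, loosely modeled on how Tink keysets designate a primary key. The Keyset record, its 4-byte key-ID ciphertext prefix, and the withKey/withPrimary methods are hypothetical; Tink's actual wire format and rotation APIs differ.

```java
import java.nio.ByteBuffer;
import java.security.GeneralSecurityException;
import java.security.SecureRandom;
import java.util.HashMap;
import java.util.Map;
import javax.crypto.Cipher;
import javax.crypto.KeyGenerator;
import javax.crypto.SecretKey;
import javax.crypto.spec.GCMParameterSpec;

final class RotationSketch {
    /** Hypothetical keyset: all keys decrypt, only the primary encrypts; ciphertexts carry a key-ID prefix. */
    record Keyset(Map<Integer, SecretKey> keys, int primaryId) {
        Keyset {
            keys = Map.copyOf(keys);
            if (!keys.containsKey(primaryId)) throw new IllegalArgumentException("primary not in keyset");
        }

        /** Phase 1: distribute the new key everywhere, but keep encrypting with the old primary. */
        Keyset withKey(int id, SecretKey key) {
            Map<Integer, SecretKey> copy = new HashMap<>(keys);
            copy.put(id, key);
            return new Keyset(copy, primaryId);
        }

        /** Phase 2: once every instance has the new key, start encrypting with it. */
        Keyset withPrimary(int id) {
            return new Keyset(keys, id);
        }

        byte[] encrypt(byte[] plaintext) {
            byte[] sealed = Aes.seal(keys.get(primaryId), plaintext);
            return ByteBuffer.allocate(4 + sealed.length).putInt(primaryId).put(sealed).array();
        }

        byte[] decrypt(byte[] blob) {
            ByteBuffer buf = ByteBuffer.wrap(blob);
            int keyId = buf.getInt(); // which key encrypted this blob
            SecretKey key = keys.get(keyId);
            if (key == null) throw new IllegalStateException("key " + keyId + " not in this keyset");
            byte[] sealed = new byte[buf.remaining()];
            buf.get(sealed);
            return Aes.open(key, sealed);
        }
    }

    /** Minimal AES-256-GCM helpers; sealed layout is IV || ciphertext || tag. */
    static final class Aes {
        private static final SecureRandom RANDOM = new SecureRandom();
        private static final int IV_LEN = 12;    // 96-bit GCM nonce
        private static final int TAG_BITS = 128; // GCM authentication tag length

        static SecretKey newKey() {
            try {
                KeyGenerator gen = KeyGenerator.getInstance("AES");
                gen.init(256, RANDOM);
                return gen.generateKey();
            } catch (GeneralSecurityException e) {
                throw new IllegalStateException(e);
            }
        }

        static byte[] seal(SecretKey key, byte[] plaintext) {
            try {
                byte[] iv = new byte[IV_LEN];
                RANDOM.nextBytes(iv); // fresh random nonce per message
                Cipher cipher = Cipher.getInstance("AES/GCM/NoPadding");
                cipher.init(Cipher.ENCRYPT_MODE, key, new GCMParameterSpec(TAG_BITS, iv));
                byte[] ct = cipher.doFinal(plaintext);
                return ByteBuffer.allocate(IV_LEN + ct.length).put(iv).put(ct).array();
            } catch (GeneralSecurityException e) {
                throw new IllegalStateException(e);
            }
        }

        /** Throws IllegalArgumentException if the key is wrong or the data was altered. */
        static byte[] open(SecretKey key, byte[] sealed) {
            try {
                Cipher cipher = Cipher.getInstance("AES/GCM/NoPadding");
                cipher.init(Cipher.DECRYPT_MODE, key, new GCMParameterSpec(TAG_BITS, sealed, 0, IV_LEN));
                return cipher.doFinal(sealed, IV_LEN, sealed.length - IV_LEN);
            } catch (GeneralSecurityException e) {
                throw new IllegalArgumentException("decryption failed", e);
            }
        }
    }
}
```

In this model, a stale instance that still holds only the old keyset can read everything written during phase 1, because those ciphertexts are tagged with the old key's ID. Calling withPrimary on one instance before the others have the new key reproduces the undecryptable-data failure described above.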

Our initial implementation used one service key per region and assumed that data would be replicated between regions at the application layer, with services re-encrypting data for the other region as it was replicated. We iterated on this to support replicating data as-is, for more flexibility and faster replication, by encrypting a global service key to region-specific KMS keys in each region where the service runs. A subtle detail here is that KMS keys are inherently region-specific: even if you import your own key material into KMS in multiple regions, data encrypted by a KMS key in one region cannot be decrypted by a KMS key created from the same key material in another region.
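
A sketch of the global-service-key arrangement, under the same caveats: RegionalKms is a hypothetical stand-in for a per-region KMS key, the region names are only examples, and provisioning (done by Square Mason in our setup) is collapsed into one function. Because every region decrypts its own copy of the same service key, data written in one region can be read in another without re-encryption, while the regional KMS keys themselves are not interchangeable.

```java
import java.nio.ByteBuffer;
import java.security.GeneralSecurityException;
import java.security.SecureRandom;
import java.util.Map;
import java.util.TreeMap;
import javax.crypto.Cipher;
import javax.crypto.KeyGenerator;
import javax.crypto.SecretKey;
import javax.crypto.spec.GCMParameterSpec;
import javax.crypto.spec.SecretKeySpec;

final class MultiRegionSketch {
    /** Hypothetical regional KMS key: a blob it encrypts cannot be decrypted by another region's key. */
    static final class RegionalKms {
        private final SecretKey key = Aes.newKey();
        byte[] encrypt(byte[] plaintext) { return Aes.seal(key, plaintext); }
        byte[] decrypt(byte[] ciphertext) { return Aes.open(key, ciphertext); }
    }

    /** Provisioning: generate ONE global service key and encrypt a copy under each region's KMS key. */
    static Map<String, byte[]> provision(Map<String, RegionalKms> kmsByRegion) {
        byte[] globalServiceKey = Aes.newKey().getEncoded();
        Map<String, byte[]> keyFiles = new TreeMap<>();
        kmsByRegion.forEach((region, kms) -> keyFiles.put(region, kms.encrypt(globalServiceKey)));
        return keyFiles;
    }

    /** Startup in a region: decrypt that region's copy with that region's KMS key. */
    static SecretKey loadServiceKey(String region, Map<String, RegionalKms> kmsByRegion,
                                    Map<String, byte[]> keyFiles) {
        return new SecretKeySpec(kmsByRegion.get(region).decrypt(keyFiles.get(region)), "AES");
    }

    /** Minimal AES-256-GCM helpers; sealed layout is IV || ciphertext || tag. */
    static final class Aes {
        private static final SecureRandom RANDOM = new SecureRandom();
        private static final int IV_LEN = 12;    // 96-bit GCM nonce
        private static final int TAG_BITS = 128; // GCM authentication tag length

        static SecretKey newKey() {
            try {
                KeyGenerator gen = KeyGenerator.getInstance("AES");
                gen.init(256, RANDOM);
                return gen.generateKey();
            } catch (GeneralSecurityException e) {
                throw new IllegalStateException(e);
            }
        }

        static byte[] seal(SecretKey key, byte[] plaintext) {
            try {
                byte[] iv = new byte[IV_LEN];
                RANDOM.nextBytes(iv); // fresh random nonce per message
                Cipher cipher = Cipher.getInstance("AES/GCM/NoPadding");
                cipher.init(Cipher.ENCRYPT_MODE, key, new GCMParameterSpec(TAG_BITS, iv));
                byte[] ct = cipher.doFinal(plaintext);
                return ByteBuffer.allocate(IV_LEN + ct.length).put(iv).put(ct).array();
            } catch (GeneralSecurityException e) {
                throw new IllegalStateException(e);
            }
        }

        /** Throws IllegalArgumentException if the key is wrong or the data was altered. */
        static byte[] open(SecretKey key, byte[] sealed) {
            try {
                Cipher cipher = Cipher.getInstance("AES/GCM/NoPadding");
                cipher.init(Cipher.DECRYPT_MODE, key, new GCMParameterSpec(TAG_BITS, sealed, 0, IV_LEN));
                return cipher.doFinal(sealed, IV_LEN, sealed.length - IV_LEN);
            } catch (GeneralSecurityException e) {
                throw new IllegalArgumentException("decryption failed", e);
            }
        }
    }
}
```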

Another lesson was that this design forced us to write backfills of encrypted data as code running in the production service, so that the backfill has access to the keys needed to decrypt, modify, and then re-encrypt the data. This is good production engineering practice anyway, and we also have custom infrastructure to help with it.
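
A minimal sketch of what such an in-service backfill looks like, assuming the decrypted service key is already in memory and rows are stored as AES-GCM ciphertexts. The backfill method and its transform parameter are hypothetical names, and a real backfill would likely also need batching, checkpointing, and writes back to the data store.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.SecureRandom;
import java.util.ArrayList;
import java.util.List;
import java.util.function.UnaryOperator;
import javax.crypto.Cipher;
import javax.crypto.KeyGenerator;
import javax.crypto.SecretKey;
import javax.crypto.spec.GCMParameterSpec;

final class BackfillSketch {
    /** Hypothetical in-service backfill: decrypt each row, transform it, re-encrypt with a fresh IV. */
    static List<byte[]> backfill(SecretKey serviceKey, List<byte[]> encryptedRows,
                                 UnaryOperator<String> transform) {
        List<byte[]> out = new ArrayList<>(encryptedRows.size());
        for (byte[] row : encryptedRows) {
            // Only code holding the decrypted service key can get past this line.
            String plaintext = new String(Aes.open(serviceKey, row), StandardCharsets.UTF_8);
            out.add(Aes.seal(serviceKey, transform.apply(plaintext).getBytes(StandardCharsets.UTF_8)));
        }
        return out;
    }

    /** Minimal AES-256-GCM helpers; sealed layout is IV || ciphertext || tag. */
    static final class Aes {
        private static final SecureRandom RANDOM = new SecureRandom();
        private static final int IV_LEN = 12;    // 96-bit GCM nonce
        private static final int TAG_BITS = 128; // GCM authentication tag length

        static SecretKey newKey() {
            try {
                KeyGenerator gen = KeyGenerator.getInstance("AES");
                gen.init(256, RANDOM);
                return gen.generateKey();
            } catch (GeneralSecurityException e) {
                throw new IllegalStateException(e);
            }
        }

        static byte[] seal(SecretKey key, byte[] plaintext) {
            try {
                byte[] iv = new byte[IV_LEN];
                RANDOM.nextBytes(iv); // fresh random nonce per message
                Cipher cipher = Cipher.getInstance("AES/GCM/NoPadding");
                cipher.init(Cipher.ENCRYPT_MODE, key, new GCMParameterSpec(TAG_BITS, iv));
                byte[] ct = cipher.doFinal(plaintext);
                return ByteBuffer.allocate(IV_LEN + ct.length).put(iv).put(ct).array();
            } catch (GeneralSecurityException e) {
                throw new IllegalStateException(e);
            }
        }

        /** Throws IllegalArgumentException if the key is wrong or the data was altered. */
        static byte[] open(SecretKey key, byte[] sealed) {
            try {
                Cipher cipher = Cipher.getInstance("AES/GCM/NoPadding");
                cipher.init(Cipher.DECRYPT_MODE, key, new GCMParameterSpec(TAG_BITS, sealed, 0, IV_LEN));
                return cipher.doFinal(sealed, IV_LEN, sealed.length - IV_LEN);
            } catch (GeneralSecurityException e) {
                throw new IllegalArgumentException("decryption failed", e);
            }
        }
    }
}
```

A job run outside the service, without the decrypted service key, fails on the first row instead of silently writing corrupted data.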

We've found that combining the AWS KMS API, Google's open-source Tink crypto library, and a small amount of internal infrastructure to make encrypting sensitive service data self-serve, easy, and safe for Cash App service engineers results in more teams encrypting more data, even without the Cash Security team's direct involvement.

This post only scratches the surface of the encryption infrastructure that we've built, and are continuing to build, to make encryption easy for Cash App engineers building services in the cloud. We hope to share more of what we've built in upcoming posts!