Making iOS Accessibility Testing Easy

Unit tests are great for testing business logic, snapshot tests make sure your views look correct, and UI tests help to ensure everything fits together properly. How do you test your app’s accessibility though?

Unit tests can test individual properties, but can easily give false positives since an element’s accessibility is affected by its position in the hierarchy.
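Here is a minimal sketch of one way this can happen. The `BalanceCard` view and its test are hypothetical names made up for illustration. The container marks itself as an accessibility element, so VoiceOver treats it as a single element and never visits the label inside it, yet a unit test on the label's own properties still passes.

```swift
import UIKit
import XCTest

// Hypothetical view, used only to illustrate the problem.
final class BalanceCard: UIView {
    let amountLabel = UILabel()

    override init(frame: CGRect) {
        super.init(frame: frame)

        amountLabel.text = "$25.00"
        amountLabel.accessibilityLabel = "Balance: 25 dollars"
        addSubview(amountLabel)

        // Marking the container itself as an accessibility element hides
        // its subviews from VoiceOver. The container has no label of its
        // own, so VoiceOver has nothing useful to read here.
        isAccessibilityElement = true
    }

    @available(*, unavailable)
    required init?(coder: NSCoder) {
        fatalError("init(coder:) is not supported in this sketch")
    }
}

final class BalanceCardTests: XCTestCase {
    func testAmountLabelHasAccessibilityLabel() {
        let card = BalanceCard(frame: CGRect(x: 0, y: 0, width: 320, height: 80))

        // Both assertions pass, even though VoiceOver never reaches the label.
        XCTAssertEqual(card.amountLabel.accessibilityLabel, "Balance: 25 dollars")
        XCTAssertTrue(card.amountLabel.isAccessibilityElement)
    }
}
```

The test only inspects the label in isolation, so it can't notice that an ancestor has changed what VoiceOver actually sees.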

Similarly, UI tests use the accessibility APIs to navigate screens, but interact with the app in a very different way than real users, so they aren’t a good indicator of whether a screen is actually accessible.

Meanwhile, manual testing is time-consuming and prone to inconsistencies as different members of your team run the tests. On top of that, the slight differences between iOS versions and device sizes multiply the number of test cases you need to run.

Iterating on changes to accessibility can be slow too, since many of the accessibility APIs can be unintuitive for even experienced developers. For example, changing an element’s accessibility properties doesn’t always affect it in the way you’d expect. This leads to more manual testing between iterations, which slows down the whole process.

So what can we do to speed up iterating on accessibility changes and avoid regressing our existing accessibility work?

Introducing AccessibilitySnapshot

Snapshot tests give you a visual representation of your views in your tests. This helps with both catching regressions and speeding up iteration when working on UI. AccessibilitySnapshot brings the power of snapshot testing to accessibility.

Adding an accessibility snapshot test is simple. Once you’ve set up the framework, configure your view and call the SnapshotVerifyAccessibility method.

let view = MyView()

SnapshotVerifyAccessibility(view)

That’s all it takes! The framework will generate an image containing a snapshot of your view with all of the accessibility elements highlighted, along with an ordered list of the descriptions that VoiceOver will read for each of the elements.
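For context, here is a sketch of how that call might sit inside a complete test case. It assumes the framework is integrated with iOSSnapshotTestCase, so the `FBSnapshotTestCase` base class, its `recordMode` property, and both module names are assumptions about your setup. `MyView` is a hypothetical stand-in for your own view.

```swift
import AccessibilitySnapshot
import FBSnapshotTestCase
import UIKit

// Hypothetical stand-in for the view under test.
final class MyView: UIView {
    override init(frame: CGRect) {
        super.init(frame: frame)
        backgroundColor = .white

        let title = UILabel(frame: bounds.insetBy(dx: 16, dy: 16))
        title.text = "Hello"
        title.autoresizingMask = [.flexibleWidth, .flexibleHeight]
        addSubview(title)
    }

    @available(*, unavailable)
    required init?(coder: NSCoder) {
        fatalError("init(coder:) is not supported in this sketch")
    }
}

// Assumes the iOSSnapshotTestCase integration; adjust the base class
// if your project uses a different snapshot engine.
final class MyViewAccessibilityTests: FBSnapshotTestCase {
    override func setUp() {
        super.setUp()
        // Set to true once to record the reference image, then back to
        // false so later runs compare against it.
        recordMode = false
    }

    func testAccessibility() {
        // Give the view a size so there is something to render.
        let view = MyView(frame: CGRect(x: 0, y: 0, width: 320, height: 120))

        SnapshotVerifyAccessibility(view)
    }
}
```

As with any snapshot test, the first run records a reference image and later runs fail if the rendered output differs from it.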

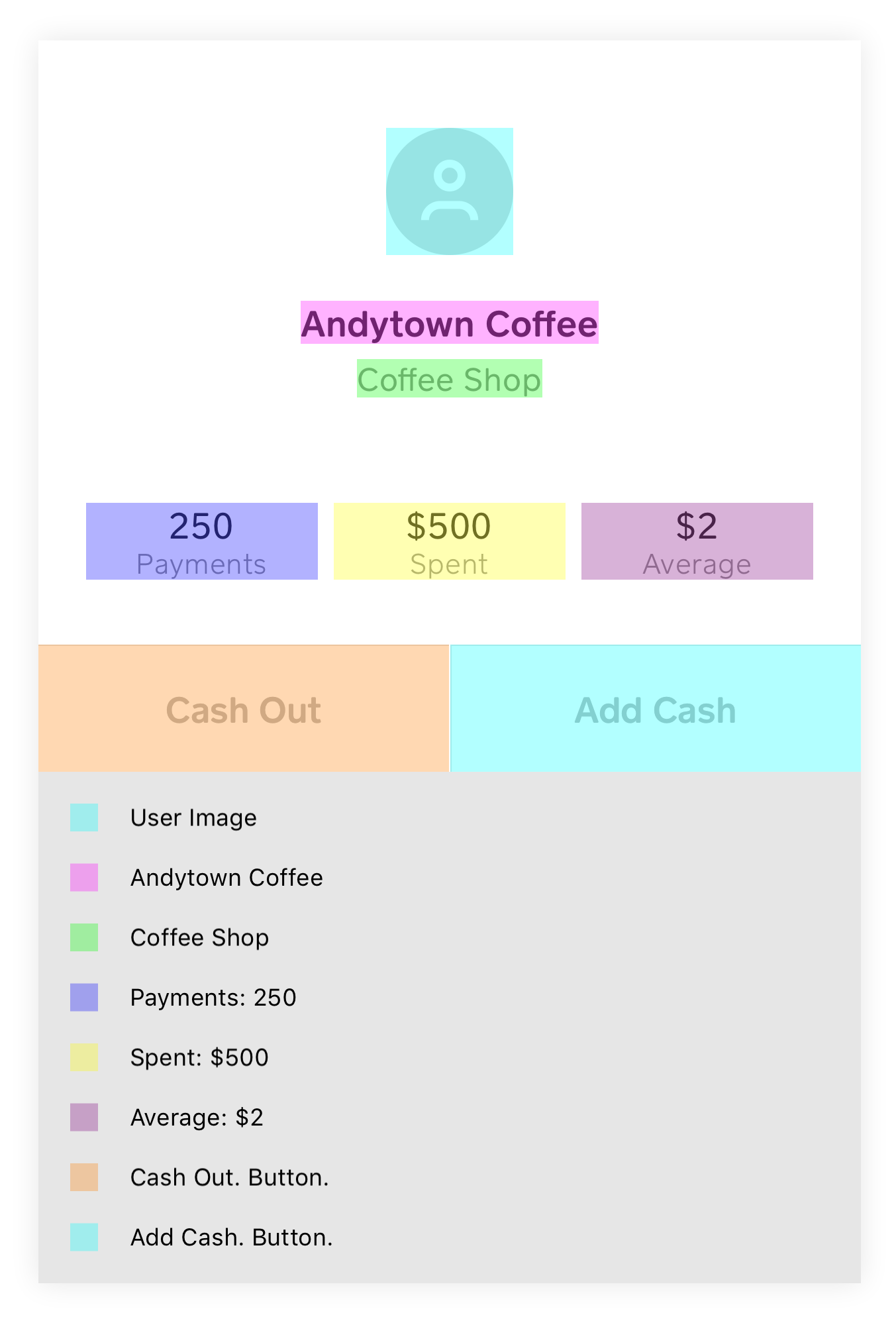

A sample snapshot reference image from a screen in Cash App. The legend below the snapshot shows the description of each element, based on various accessibility properties.

Now if your view’s accessibility regresses, you’ll get a test failure and a snapshot image showing exactly what broke.

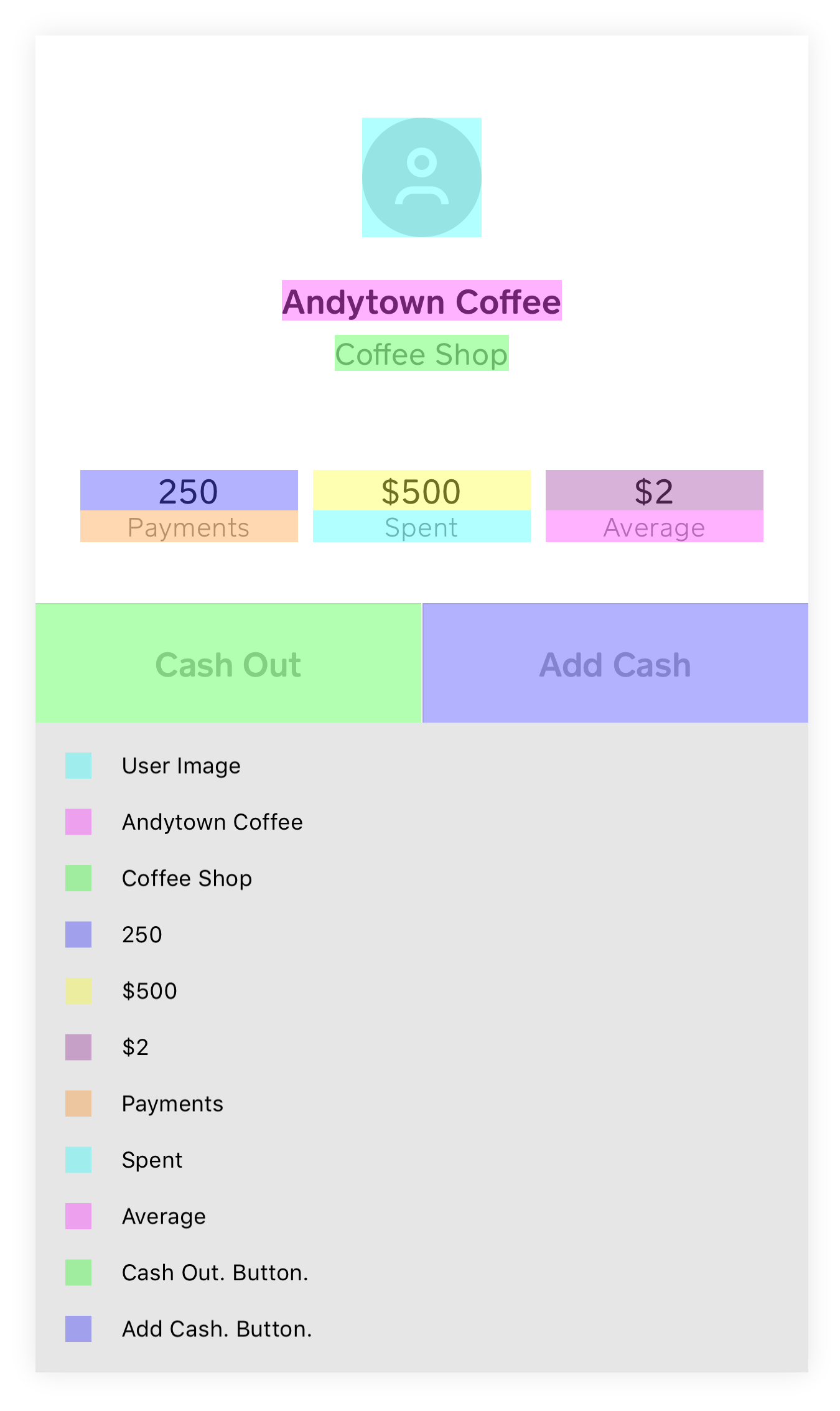

A sample failed snapshot image showing a regression in the grouping of elements.

Going Beyond VoiceOver

While VoiceOver is often the first feature that comes to mind when thinking about accessibility, iOS offers quite a few other accessibility features. AccessibilitySnapshot lays the groundwork for testing a variety of them. It already supports testing the Invert Colors accessibility setting, and support for testing Dynamic Type is in progress.
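A test for Invert Colors might look something like the sketch below. The helper name `SnapshotVerifyWithInvertedColors` and the `FBSnapshotTestCase` base class are assumptions here, so check the project for the exact API in the version you use. `accessibilityIgnoresInvertColors` is the UIKit property (iOS 11 and later) that opts a view out of Smart Invert, which is the kind of behavior this test is meant to capture.

```swift
import AccessibilitySnapshot
import FBSnapshotTestCase
import UIKit

// Assumes the iOSSnapshotTestCase integration.
final class InvertColorsTests: FBSnapshotTestCase {
    func testPhotoKeepsItsColorsWhenInverted() {
        let container = UIView(frame: CGRect(x: 0, y: 0, width: 200, height: 100))
        container.backgroundColor = .white

        // Stand-in for a photo: a plain colored image view.
        let photo = UIImageView(frame: CGRect(x: 0, y: 0, width: 100, height: 100))
        photo.backgroundColor = .orange

        // Photos usually look wrong when inverted, so opt this view out.
        photo.accessibilityIgnoresInvertColors = true
        container.addSubview(photo)

        // Assumed helper name; see the lead-in above.
        SnapshotVerifyWithInvertedColors(container)
    }
}
```

If the opt-out is ever removed by accident, the inverted snapshot would change and the test would flag it.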

Getting Started with Snapshotting Accessibility

AccessibilitySnapshot is available on GitHub today. Check out the project for more details on how to get it set up for your app.

Find something it doesn’t support yet? We welcome issues and pull requests.

Happy testing!