Sharding Cash

As we approach the end of 2018, we’re celebrating the anniversary of the first shard split for Cash App with a series of blog posts explaining how we used Vitess to scale out. Rapid growth is hard, and it’s not always a smooth ride. We’re excited to go into depth on many of the things we’ve learnt along the way. There will be tales of major domain model refactorings, distributed deadlocks, dealing with cross-shard queries, operations, and how we rebuilt the Vitess shard splitting process. Stay tuned.

It’s early Friday morning in Kitchener, outside Toronto in Canada, and I’m just about to kick off the last few procedures on the final shard split for the year. With a bit of luck we will complete the split before we’re hit by the onslaught of Friday peak traffic, Cash App’s most intense day of the week.¹

Almost exactly one year earlier, in a conference room in our old Kitchener office: It’s the culmination of one of the hardest things I’ve ever worked on in my career. The other full-time member of the “sharding team”, Mike Pawliszyn, worked overnight to run split clones and diffs for our first shard split and has now slept through his alarm in sheer exhaustion. Jesse Wilson and Alan Paulin, two early members of the Cash team, are sitting next to me. My finger trembles over the Enter key for possibly one of the most dramatic commands I will execute in my career.

vtctlclient MigrateServedFrom main/- MASTER

This command is the last and final step of a process called a “vertical split”. It migrates master traffic for a specific set of tables from one database to a clone of those tables in a separate database. The clone is being kept up to date by replaying the MySQL transaction log (the “binlogs”). This shard split is to separate tables that we won’t shard from ones that will be sharded even further in the future.

After I run this command we will have completed the first (out of many, many) shard splits.

It’s not reversible without very significant data loss.²

I am utterly terrified.

I stand up and pace around the room. Is this really it? Have we thought about everything? What if I missed something? What if I screw everything up?

Cash App’s popularity has continued to grow — seen here at #5 in Apple’s App Store

Let’s back up to late 2016. Cash was growing tremendously and struggled to stay up during peak traffic. We were running through the classic playbook for scaling out: caching, moving out historical data, replica reads, buying expensive hardware. But it wasn’t enough. Each one of these things bought us time, but we were also growing really fast and we needed a solution that would let us scale out infinitely. We had one final item left in the playbook.

We had to shard.

Cash was initially built during a hack week as a simple experiment. It was built on top of a single MySQL database. Over the years the product got traction and the team grew. The codebase grew to over half a million lines of code. This single database grew and grew and grew. It was now time to split it up into many smaller databases. To shard it.

Sharding is a scary thing. Every single one of those lines of code assumed we had a single database. Over the decades, relational databases have perfected the illusion of having the database all to yourself, when in fact, you’re just one of thousands of concurrent users. ACID transactions are what maintain that illusion. When you shard, you split up that single database and break the illusion. If your code isn’t aware, things will go wrong in bad ways. 💥

If you boil it down, there are essentially two parts to sharding:

- You have to be able to split databases, or what we call a shard split.

- Now that you have many database shards, you have to be able to route queries to the correct one.
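To make those two parts concrete, here is a minimal Python sketch. It is not how Vitess is implemented. It assumes each row’s sharding key is hashed to a 64-bit number (a “keyspace id”) and that each shard owns a contiguous range of those numbers. The names `Shard`, `keyspace_id`, `split` and `route`, and the choice of SHA-256 as the hash, are illustrative assumptions.

```python
import hashlib
from dataclasses import dataclass
from typing import List, Tuple

KEYSPACE_MAX = 1 << 64  # keyspace ids are treated as unsigned 64-bit integers here


@dataclass(frozen=True)
class Shard:
    start: int  # inclusive lower bound of the keyspace id range
    end: int    # exclusive upper bound of the keyspace id range

    def contains(self, kid: int) -> bool:
        return self.start <= kid < self.end


def keyspace_id(sharding_key: str) -> int:
    # Illustrative hash only: take the first 8 bytes of SHA-256.
    digest = hashlib.sha256(sharding_key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big")


def split(shard: Shard) -> Tuple[Shard, Shard]:
    # Part 1: a shard split divides one range into two adjacent halves.
    mid = (shard.start + shard.end) // 2
    return Shard(shard.start, mid), Shard(mid, shard.end)


def route(shards: List[Shard], sharding_key: str) -> Shard:
    # Part 2: routing finds the one shard whose range holds the key's id.
    kid = keyspace_id(sharding_key)
    for shard in shards:
        if shard.contains(kid):
            return shard
    raise LookupError("no shard covers keyspace id %d" % kid)


whole = Shard(0, KEYSPACE_MAX)
left, right = split(whole)
target = route([left, right], "customer-42")
```

Because a split only divides a range in two, every key still maps to exactly one shard afterwards. Only the list of shards handed to `route` has to change.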

We started out designing our own solution to this problem — but as we socialized our design around Square, someone mentioned this thing called Vitess to us. Vitess was built at YouTube to accomplish exactly this. When we started looking at Vitess it was still early days. Documentation was nascent. Features were lacking. But there was something there, and the creator Sugu Sougoumarane was very responsive and helpful. We decided to take some time and see if Vitess could work for us.

Vitess is essentially a distributed database that runs on top of multiple MySQL instances. If you have a single MySQL database, you can slide Vitess in between your app and MySQL and then split up your database while maintaining an illusion that it’s a single database. You speak SQL to Vitess, and Vitess routes that SQL to the correct MySQL shard.

The catch is that the illusion is imperfect. You can have transactions that span multiple shards, but those transactions aren’t fully ACID. Sometimes a query needs to fan out to multiple shards, and such a query has entirely different performance and uptime characteristics from a query that goes to a single shard. Our code was not aware of these imperfections in the illusion.

At Cash App, as with Square, customers are trusting us with their money. We will never compromise data consistency in exchange for availability. Other users of Vitess (like YouTube) can make different trade-offs: maybe dropping a comment every once in a while isn’t the end of the world for them. But not us. So the first thing we had to do was change our application code so that it wouldn’t do cross-shard transactions in critical money-processing portions of the code. Cross-shard transactions can fail partially, and that’s unacceptable to us.
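Here is a small Python sketch of what a partial failure can look like. It is a toy model, not Vitess code. It assumes a transaction that touches two shards is committed one shard at a time with no coordination between them, and `FakeShard`, `ShardUnavailable` and `best_effort_commit` are hypothetical names made up for the example.

```python
class ShardUnavailable(Exception):
    """Raised by the toy shard when its commit fails."""


class FakeShard:
    """In-memory stand-in for one database shard (hypothetical)."""

    def __init__(self, name, fail_on_commit=False):
        self.name = name
        self.fail_on_commit = fail_on_commit
        self.committed = {}
        self._pending = {}

    def stage(self, key, value):
        # Buffer a write until commit.
        self._pending[key] = value

    def commit(self):
        if self.fail_on_commit:
            self._pending.clear()  # the staged writes are lost
            raise ShardUnavailable(self.name)
        self.committed.update(self._pending)
        self._pending.clear()


def best_effort_commit(shards):
    """Commit shard by shard; stop at the first failure.

    Returns (names that committed, name that failed or None).
    """
    committed = []
    for shard in shards:
        try:
            shard.commit()
        except ShardUnavailable:
            return committed, shard.name
        committed.append(shard.name)
    return committed, None


sender_shard = FakeShard("shard-a")
recipient_shard = FakeShard("shard-b", fail_on_commit=True)
sender_shard.stage("sender_balance", 90)        # debit 10
recipient_shard.stage("recipient_balance", 10)  # credit 10
committed, failed = best_effort_commit([sender_shard, recipient_shard])
```

In this run the debit is durable on the first shard while the credit never lands on the second, and nothing rolls the debit back. That is the kind of outcome we could not accept for money movement.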

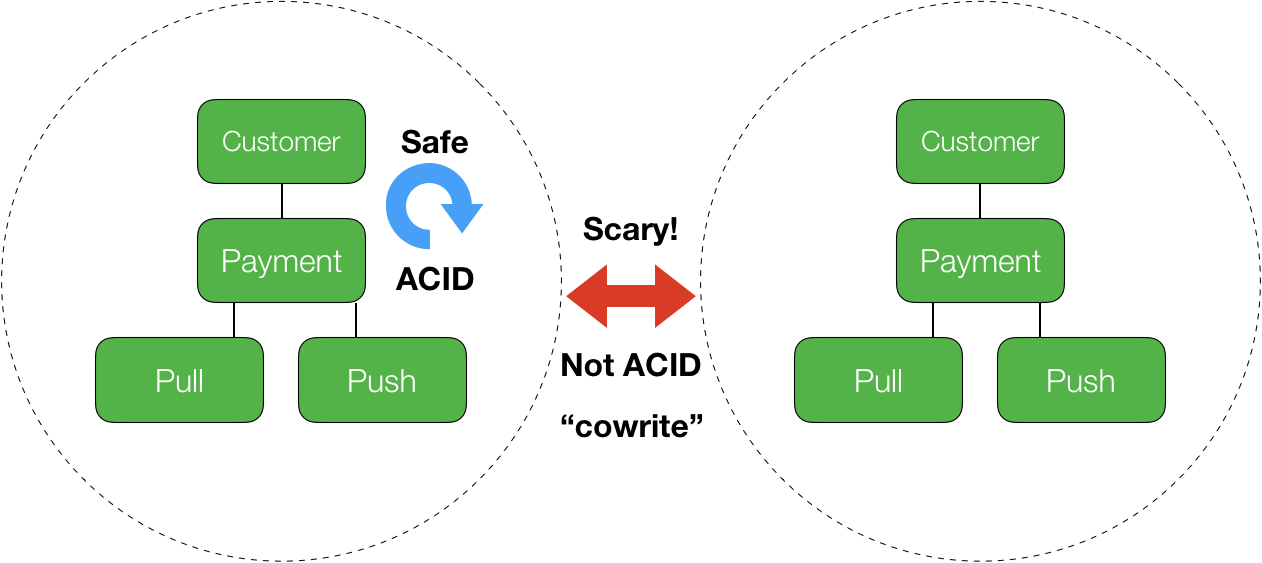

The most important idea we introduced to our codebase was the notion of an entity group. We could configure Vitess in such a way that all the members of an entity group would stay together over shard splits. In short, while you’re inside an entity group everything is safe. The illusion is again perfected. When you span entity groups, however, things might get scary. 👻

In our case, our main entity group was the customer. Transactions inside the data for a single customer are always safe and fully ACID, but transactions that span multiple customers (we called them “cowrites”) were not, and we had to take precautions to maintain integrity.
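As a rough illustration of the idea, the Python sketch below tags every write with the customer that owns the row and flags a transaction as a cowrite when it touches more than one customer. This is not our production code. The `Write` type, the table names and the `is_cowrite` helper are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, Set


@dataclass(frozen=True)
class Write:
    table: str        # hypothetical table name
    customer_id: int  # root of the entity group the row belongs to


def entity_groups(writes: Iterable[Write]) -> Set[int]:
    # Every row is owned by exactly one customer, so the set of customer
    # ids is the set of entity groups the transaction touches.
    return {w.customer_id for w in writes}


def is_cowrite(writes: Iterable[Write]) -> bool:
    # More than one entity group means the rows may live on different
    # shards, so single-database ACID guarantees can no longer be assumed.
    return len(entity_groups(writes)) > 1


single_customer = [Write("payments", 1), Write("balances", 1)]
two_customers = [Write("balances", 1), Write("balances", 2)]
```

Here `is_cowrite(single_customer)` is `False` and `is_cowrite(two_customers)` is `True`. A check along these lines is one way code could decide when the extra precautions are needed.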

This was a major change to our code — one that Jesse Wilson is going to talk all about in an upcoming post in this series.

One year later, and we’re back at the office that cold November morning. I’ve been pacing around the office nervously for a while now. Alan and Jesse tell me it will be fine. We won’t be able to complete the shard split if we get too far into the day’s peak traffic. It’s time…

I sit down and hit the Enter key.

We all stare intently at various monitoring screens.

“Query traffic is dropping off the main shard.”

“Traffic is now on the new shard.”

There is a single page from our alerting system and errors quickly drop off. We had taken less than a second of downtime.

It worked.

We’re celebrating the anniversary of the first shard split for Cash App with a series of posts talking about how we used Vitess to scale.

Coming up next in our Vitess series:

- Remodeling Payments

- Distributed Deadlocks

- Cross-Shard Queries & Lookup Tables

- Shard Splits With Consistent Snapshots

- Operating Vitess

Stay tuned!

¹ We did complete the split before peak traffic!

² Master traffic migrations have recently been made reversible, so this is no longer quite as anxiety-inducing as it used to be.