Implementing Kafka in the Payments PCI World

Summary

This article describes the challenges, design decisions and outcomes of implementing Kafka in the PCI zone of Afterpay’s Global Payments Platform. To satisfy PCI DSS requirements, we combined AWS PrivateLink with custom Kafka client libraries (producer and consumer) to form the solution for the Payments Platform.

Context

The asynchronous processing capability that Kafka offers opens up numerous innovation opportunities to interact with other services. In addition, it helps decouple platform features from the mission-critical payments flows.

For example, we have scheduled Quartz jobs that make changes to our data, such as payment tokens. Copies of that data are spread across other systems within Afterpay, so if the Payments Platform does not signal those systems when the data changes, inconsistencies can arise. A synchronous API call wouldn’t make sense for this kind of notification. Instead, a message queue with an opt-in subscription model is ideal. This pattern also lets us move away from our dependency on binlog replication for sending data to the data warehouse.

What are the PCI DSS challenges?

PCI DSS is a set of requirements that ensures any company or seller handling cardholder data can safely and securely accept, store, process and transmit it during card transactions. Afterpay’s Global Payments Platform is PCI DSS certified, which means every technical implementation needs to satisfy the following requirements:

- Stricter ingress and egress networking control

- Strong infrastructure isolation

- Data integrity and security

- Secure cardholder data handling

Because of the lack of support for overlapping VPC CIDR ranges in AWS, and because the networking infrastructure is shared with other teams in Afterpay, it was difficult to establish strict ingress and egress control. A shared network would also leave the payments infrastructure more exposed to teams that have no need to see or access cardholder data. That eliminated AWS Transit Gateway as a networking option.

Given our specific sensitive-data handling and data integrity requirements, the standard Kafka client libraries couldn’t be used out of the box; we needed to customise them.

How did we solve it?

In collaboration with our fantastic Platform Engineering team, we established dedicated AWS PrivateLink connections between the Payments Platform’s PCI infrastructure and Platform Engineering’s Kafka infrastructure. This locks ingress and egress down to allowed applications only, and keeps the PCI infrastructure from being exposed to the rest of Afterpay.

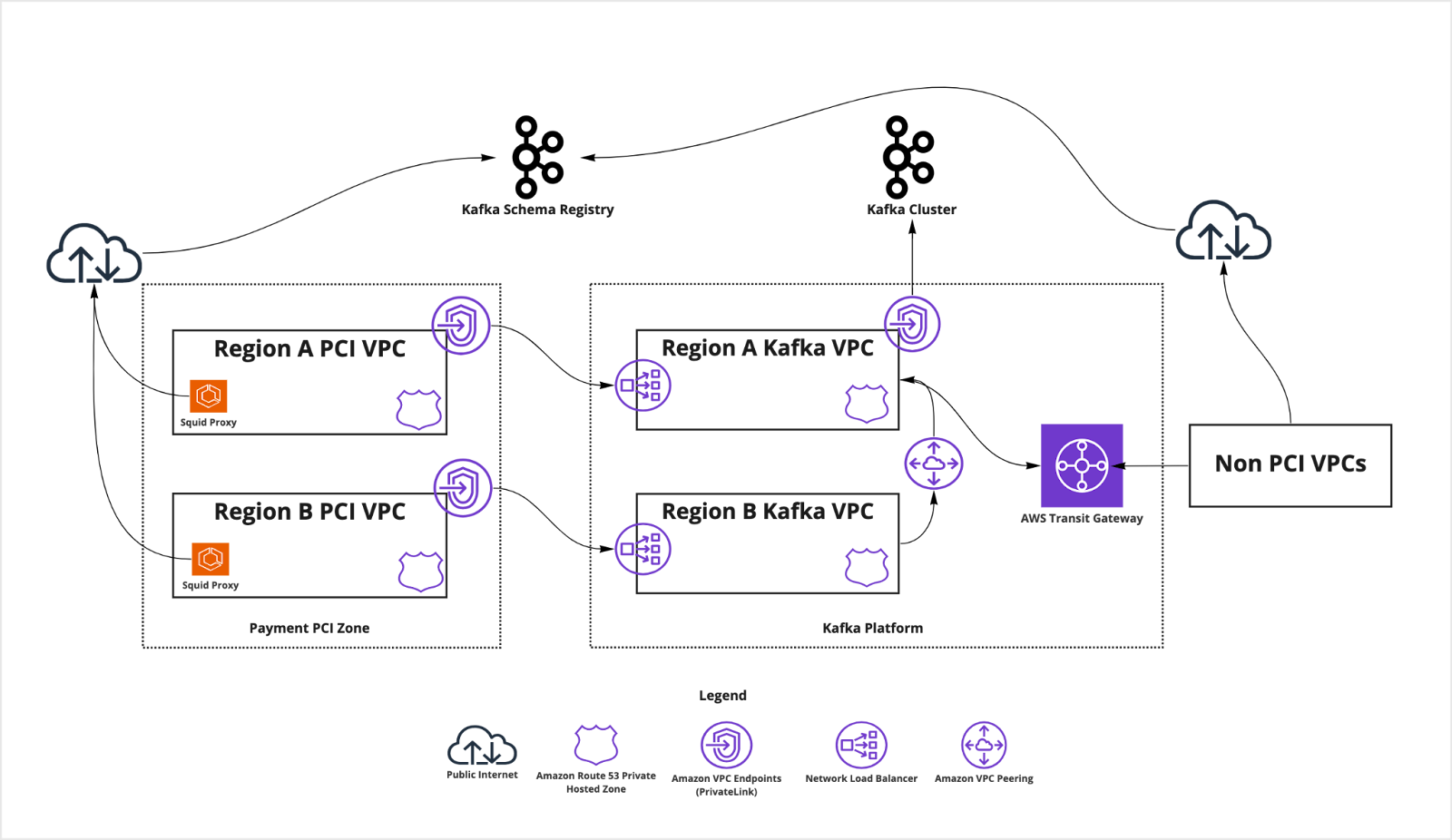

Note: this is a simplified diagram for illustrative purposes only

From the above diagram, we can see that Payments PCI VPCs are connected to Kafka clusters via two PrivateLink jumps - PCI to Platform, and Platform to Kafka Cluster, while other teams are all going through the AWS Transit Gateway to the Kafka cluster with one PrivateLink jump. To ensure Kafka brokers can communicate with each other and resolve DNS names, an extra AWS Route53 private hosted zone was created within the PCI VPC to match the private hosted zone in Platform’s proxy VPC. The private hosted zone setup is critical, as it ensures the Kafka DNS resolution can work all the way through to the Kafka cluster, via two PrivateLink connections.

Access to the Kafka Schema Registry is over HTTP, so we use a Squid proxy that explicitly whitelists the registry’s DNS name to maintain rigid egress traffic control.

Additionally, we built our own custom Kafka client libraries, producer and consumer, to have the following extra capabilities:

Card number detection and obfuscation during Avro serialisation to ensure sensitive information does not leak out of the PCI zone

To ensure sensitive data is never published to Kafka, extra care was taken in the custom producer library. While the raw message is being serialised into the Avro format, each field is checked for potential card numbers. Detection currently uses regular expressions, with plans to add the Luhn algorithm to reduce false positives. We customised the Kafka Avro Serializer to inject the card number obfuscator.
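The field-by-field check described above can be sketched roughly as follows. This is a minimal Python illustration, not the actual producer library: the pattern, the masking character and the names `CARD_PATTERN`, `luhn_valid`, `obfuscate_text` and `obfuscate_record` are all assumptions made for the example. The optional Luhn check shows how the planned improvement might cut false positives.

```python
import re

# Assumed pattern: 13 to 19 digits, optionally separated by single spaces or hyphens.
CARD_PATTERN = re.compile(r"(?<!\d)\d(?:[ -]?\d){12,18}(?!\d)")


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def obfuscate_text(value: str, use_luhn: bool = False) -> str:
    """Mask anything in a string that looks like a card number."""
    def mask(match):
        digits = re.sub(r"\D", "", match.group())
        if use_luhn and not luhn_valid(digits):
            return match.group()  # digit run fails Luhn, so leave it untouched
        return "*" * len(match.group())

    return CARD_PATTERN.sub(mask, value)


def obfuscate_record(record, use_luhn: bool = False):
    """Walk a dict/list record (as it might look before encoding) and mask string fields."""
    if isinstance(record, dict):
        return {k: obfuscate_record(v, use_luhn) for k, v in record.items()}
    if isinstance(record, list):
        return [obfuscate_record(v, use_luhn) for v in record]
    if isinstance(record, str):
        return obfuscate_text(record, use_luhn)
    return record
```

With regex-only detection, a 16-digit order number would be masked just like a card number. With `use_luhn=True`, only digit runs that pass the checksum are masked, which is the trade-off behind the planned change.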

Built-in thread pool with a queue to make event publication truly non-blocking to ensure low latency

Although Kafka event publication is mostly asynchronous, there are still synchronous steps, e.g. waiting on a metadata update and establishing the first connection with the Kafka cluster. To prevent Kafka publication from blocking the mission-critical flows, the custom producer library has a built-in thread pool with a queue that dispatches the entire publishing process asynchronously. This way, Kafka event publication happens silently in the background, decoupled from the main payments flow, so it neither interferes with the customer experience nor adds latency to it.
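The dispatch pattern described above might look something like the sketch below. It is an assumption-laden illustration in Python rather than the real library: `NonBlockingPublisher`, the `send` callable (standing in for a blocking Kafka publish), the bounded queue size and the drop-when-full policy are all hypothetical choices made for the example.

```python
import queue
import threading


class NonBlockingPublisher:
    """Hands events to background workers so the caller never waits on the broker."""

    def __init__(self, send, workers=2, max_pending=1000):
        self._send = send  # assumed: a blocking function send(topic, event)
        self._queue = queue.Queue(maxsize=max_pending)
        self._threads = [
            threading.Thread(target=self._run, daemon=True) for _ in range(workers)
        ]
        for t in self._threads:
            t.start()

    def publish(self, topic, event) -> bool:
        """Enqueue and return immediately; False means the queue was full."""
        try:
            self._queue.put_nowait((topic, event))
            return True
        except queue.Full:
            return False  # assumed policy: drop rather than block the caller

    def _run(self):
        while True:
            item = self._queue.get()
            try:
                if item is None:  # shutdown sentinel
                    return
                self._send(*item)
            except Exception:
                pass  # a failed publish must not affect the caller; log it in real code
            finally:
                self._queue.task_done()

    def close(self):
        """Wait for queued events to be sent, then stop the workers."""
        self._queue.join()
        for _ in self._threads:
            self._queue.put(None)
        for t in self._threads:
            t.join()
```

The caller only ever pays for `put_nowait`, so a slow metadata fetch or first connection is absorbed by the worker threads. Bounding the queue is a design choice in this sketch: it caps memory use at the cost of dropping events under sustained back-pressure.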

HMAC verification to ensure Kafka event message integrity

Whenever applications in the PCI zone consume messages, they need to make sure that the message hasn’t been tampered with. With Platform Engineering’s support, Kafka producers are able to use an AWS KMS key to sign the message, and the consumers in the PCI zone can use the same key to verify that signature, making sure the integrity of the message is intact.
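The sign-then-verify flow can be illustrated with a small Python sketch. It uses a local shared secret and the standard `hmac` module as a stand-in, because the details of how the AWS KMS key is used are not covered here. The function names and the choice of SHA-256 are assumptions for the example.

```python
import hashlib
import hmac


def sign(key: bytes, payload: bytes) -> str:
    """Producer side: compute an HMAC-SHA256 signature over the message bytes."""
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify(key: bytes, payload: bytes, signature: str) -> bool:
    """Consumer side: recompute the HMAC and compare in constant time."""
    expected = sign(key, payload)
    return hmac.compare_digest(expected, signature)
```

A producer would attach the signature to the message (for example in a record header, which is an assumption here), and a consumer in the PCI zone would reject any message for which `verify` returns `False`.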

Where we are now and what next

We have deployed the infrastructure to all payments environments ready for any payments applications to hop on the event train. Millions of messages have been published since the initial rollout with more topics to come.

With the infrastructure and foundation work in place, the team has many plans to leverage Kafka further and to improve its functionality and reliability. For instance, the team is building an at-least-once delivery model that uses AWS DynamoDB with DynamoDB Streams as an intermediate persistence layer, instead of Debezium, to take advantage of DynamoDB’s performance and scalability.

Addendum

It has been a fun ride working on this project to improve Afterpay’s Global Payments Platform and it’s also a great opportunity for engineers to learn and grow. Here are a few learnings that I would like to share:

- Kafka is a great tool for distributed storage and asynchronous data processing, but extra care is needed to make sure it complements, rather than interferes with, the main application flow. It is by no means a replacement for existing databases.

- Both AWS Transit Gateway and AWS PrivateLink are fantastic networking options, but in our case, the unidirectional connectivity of AWS PrivateLink makes strict security requirements easier to manage.

We pride ourselves on applying rigorous engineering standards and principles and using innovative problem-solving approaches. We, in the Payments Platform Engineering team, are also in a unique position because we manage our own infrastructure, which means engineers get to work on interesting problems in both infrastructure architecture and application development - best of both worlds! We are always looking for awesome talent to join us.