How We Sped Up Zipline Hot Reload

Zipline is a library Cash App has developed for fetching and executing code on demand in a mobile app. Developers write their code in Kotlin and it gets compiled to JS, hosted on a server or CDN, and executed in the QuickJS engine in the app. This Droidcon presentation explains how it all works in more detail.

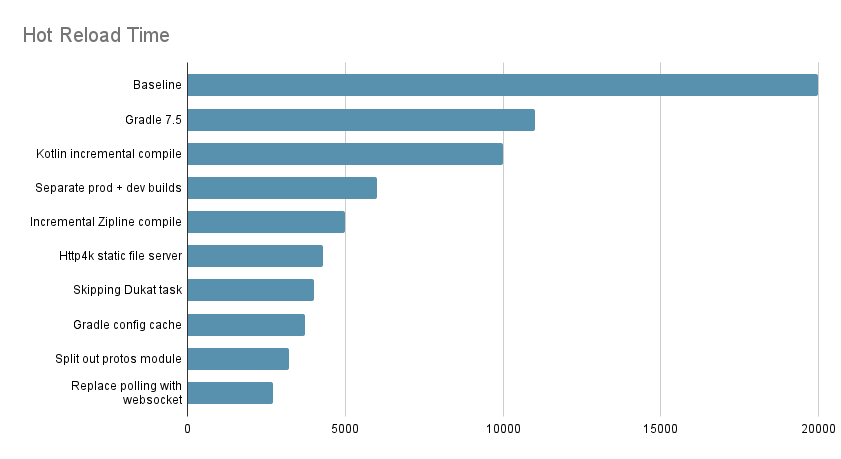

One cool thing about Zipline is its support for hot reload: when developing locally, as soon as you make a change to the code, it is recompiled, served by a local server, and the app picks up the change. However, I noticed that it was taking 15-20 seconds for the hot reload to take effect, far longer than I expected.

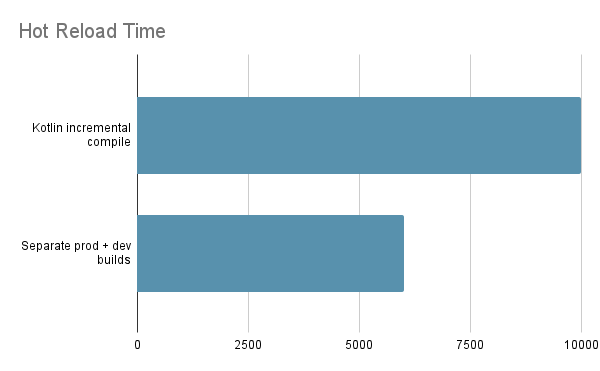

I talked with Jake Wharton, one of the developers of Zipline, and he pointed out that my project was using Gradle 7.4, which had a slow implementation of file watching. Luckily this had been fixed in Gradle 7.5. After upgrading I saw the hot reload time drop to 11 seconds!

Jake also suggested turning on incremental Kotlin/JS compilation, which knocked off another second, bringing the total time down to 10 seconds.

This speedup was incredible! I was hooked. There’s a huge developer experience difference between 20 and 10 seconds, but 5 seconds felt like a sweet spot that wouldn’t interrupt my workflow too much. I decided to see if there were other improvements that we could make.

Prod and Dev Builds

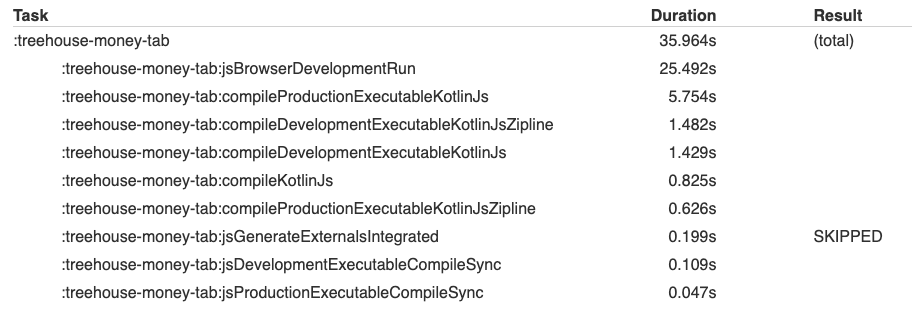

The first thing I did was analyze the Gradle build. Gradle has a handy built-in profiler:

gradle jsBrowserDevelopmentRun --profile

Immediately I noticed something strange: there were both development and production tasks running.

What’s going on here?

The Kotlin/JS Gradle plugin compiles Kotlin→JS and then serves it locally via Webpack. Our apps use the QuickJS optimized bytecode instead of the raw JS, so we need another step to go from JS→QuickJS bytecode. This is accomplished by making the Zipline compile task a dependency of the Webpack task:

project.tasks.withType(KotlinWebpack::class.java).configureEach { kotlinWebpack -> kotlinWebpack.dependsOn(compileZiplineTaskName) }

The problem is that there are actually two Webpack tasks: one for development, and one for production. This snippet indiscriminately adds the Zipline task to both, which means that whether we run the development or production Webpack task we end up having to run both prod and dev Zipline tasks (which in turn run both prod and dev Kotlin→JS tasks). Pretty wasteful! Changing the code so that we only run the correct development or production task shaved off around 4 seconds from the build. Now hot reloads were taking under 6 seconds.
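
To make the wasted work concrete, here is a toy model of the task wiring described above. It is not the Zipline plugin's code: `TaskGraph`, `wire`, and the task names are all hypothetical, and the "graph" is just a map of task names to their dependencies. It shows how many tasks end up in the execution set when every Webpack task depends on every Zipline task, versus only the one for its own mode.

```kotlin
enum class Mode { DEVELOPMENT, PRODUCTION }

// Toy stand-in for a Gradle task graph: task name -> names of the tasks it depends on.
class TaskGraph {
    private val deps = mutableMapOf<String, MutableSet<String>>()

    fun dependsOn(task: String, dependency: String) {
        deps.getOrPut(task) { linkedSetOf() }.add(dependency)
        deps.getOrPut(dependency) { linkedSetOf() }
    }

    // The task itself plus everything that must run before it, transitively.
    fun executionSet(task: String): Set<String> {
        val seen = linkedSetOf<String>()
        fun visit(name: String) {
            if (seen.add(name)) deps[name].orEmpty().forEach { visit(it) }
        }
        visit(task)
        return seen
    }
}

// matchMode = false models the original wiring (every Webpack task depends on every
// Zipline task); matchMode = true models wiring each Webpack task to its own mode only.
fun wire(matchMode: Boolean): TaskGraph {
    val graph = TaskGraph()
    for (mode in Mode.values()) {
        // Each Zipline task needs the Kotlin→JS output for the same mode.
        graph.dependsOn("zipline-$mode", "kotlinJs-$mode")
    }
    for (webpackMode in Mode.values()) {
        for (ziplineMode in Mode.values()) {
            if (!matchMode || ziplineMode == webpackMode) {
                graph.dependsOn("webpack-$webpackMode", "zipline-$ziplineMode")
            }
        }
    }
    return graph
}
```

In this model, `wire(matchMode = false).executionSet("webpack-DEVELOPMENT")` contains five tasks (including both production ones), while `wire(matchMode = true)` leaves only the three development tasks.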

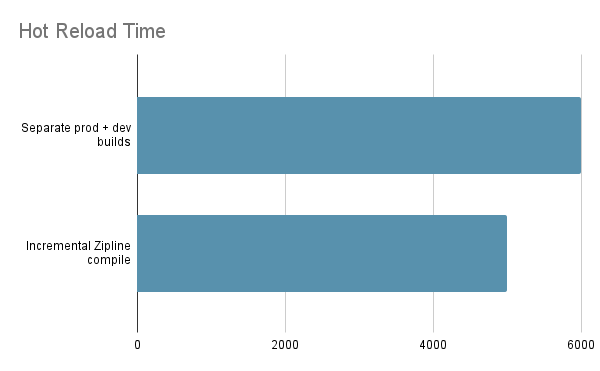

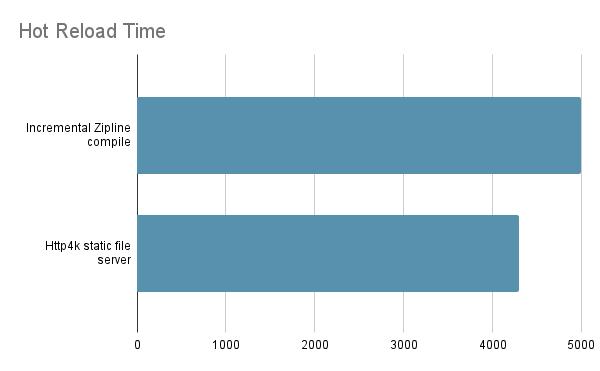

Compiling Zipline Incrementally

The Zipline compile task was taking ~1.5 seconds. That seemed a bit high to compile the single file that I was changing. After poking around a bit, I discovered that Zipline was recompiling all modules on any code change, instead of just the module that I changed.

Adding incremental compile support to the Zipline Gradle Plugin sped up the build by another second or so.

This brought hot reload times down to 5 seconds.
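
The general idea behind incremental compilation can be sketched in a few lines of plain Kotlin. This is an illustration under assumptions, not the Zipline Gradle Plugin's implementation: `IncrementalCompiler` is a hypothetical class, the `compile` lambda is a placeholder for the real JS→QuickJS bytecode step, and change detection here is a simple SHA-256 comparison of each module's input.

```kotlin
import java.security.MessageDigest

fun sha256(bytes: ByteArray): String =
    MessageDigest.getInstance("SHA-256").digest(bytes).joinToString("") { "%02x".format(it) }

// Hypothetical helper: remembers the hash of each module's input and only calls
// `compile` again when that hash changes.
class IncrementalCompiler(private val compile: (name: String, source: ByteArray) -> ByteArray) {
    private val lastHash = mutableMapOf<String, String>()
    private val outputs = mutableMapOf<String, ByteArray>()

    /** Recompiles only the modules whose content changed; returns the names that were recompiled. */
    fun build(modules: Map<String, ByteArray>): List<String> {
        val recompiled = mutableListOf<String>()
        for ((name, source) in modules) {
            val hash = sha256(source)
            if (lastHash[name] != hash) {
                outputs[name] = compile(name, source)
                lastHash[name] = hash
                recompiled += name
            }
        }
        // Forget modules that no longer exist so stale outputs are not kept around.
        lastHash.keys.retainAll(modules.keys)
        outputs.keys.retainAll(modules.keys)
        return recompiled
    }
}
```

With this shape, editing a single module means a single call to `compile`, no matter how many other modules the app depends on.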

Using Our Own Static File Server

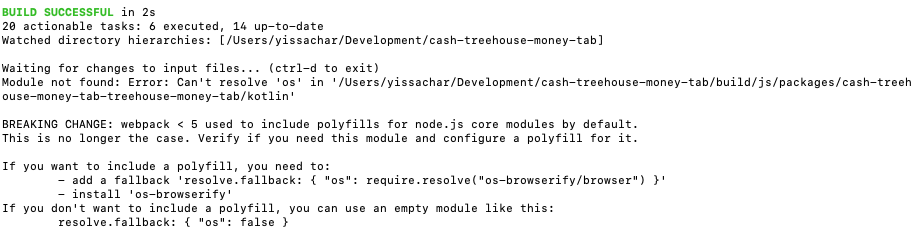

While working on the changes above I’d noticed some weird Gradle build output:

I suspected Webpack was doing extra work that we didn’t need. All we want is a very basic static file server to host the compiled Zipline files. I found http4k which is about as simple a server as you can get; the core library has no external dependencies. After hooking it up to the Zipline Gradle Plugin and replacing Webpack we saw a 700ms speedup (and, as a bonus, removed the Webpack warnings).

Hot reloads were now down to ~4.3 seconds.
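
To give a sense of how little a static file server needs to do, here is a minimal sketch. Note that it deliberately swaps in the JDK's built-in `com.sun.net.httpserver.HttpServer` rather than http4k, so that it runs with no dependencies at all; it is not the code that went into the Zipline Gradle Plugin, and `startStaticServer` is a hypothetical name.

```kotlin
import com.sun.net.httpserver.HttpServer
import java.net.InetSocketAddress
import java.nio.file.Files
import java.nio.file.Path

// Serves the files under `root` over HTTP on localhost. Pass port 0 to let the OS pick a free port.
fun startStaticServer(root: Path, port: Int): HttpServer {
    val base = root.toAbsolutePath().normalize()
    val server = HttpServer.create(InetSocketAddress("127.0.0.1", port), 0)
    server.createContext("/") { exchange ->
        try {
            val file = base.resolve(exchange.requestURI.path.removePrefix("/")).normalize()
            // Only serve regular files that really live under the root directory.
            if (file.startsWith(base) && Files.isRegularFile(file)) {
                val bytes = Files.readAllBytes(file)
                exchange.sendResponseHeaders(200, bytes.size.toLong())
                exchange.responseBody.write(bytes)
            } else {
                exchange.sendResponseHeaders(404, -1) // -1 means "no response body"
            }
        } finally {
            exchange.close()
        }
    }
    server.start()
    return server
}
```

Calling `startStaticServer(outputDir, 8080)` would serve whatever is in `outputDir`; a real development server would likely also want content types and cache headers, which are left out here.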

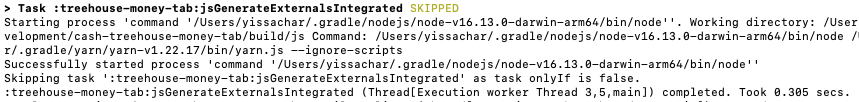

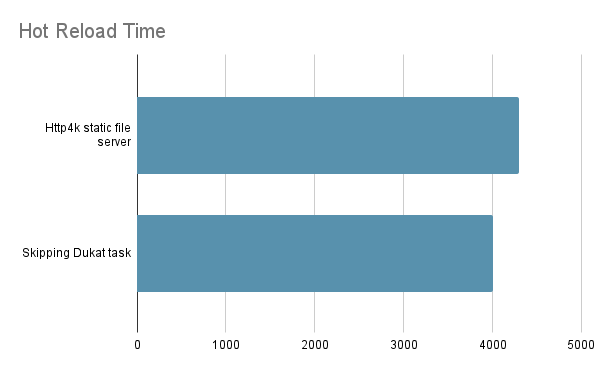

“Skipped” Tasks

Looking over the Gradle build, another task caught my eye:

Supposedly this jsGenerateExternalsIntegrated task was being skipped, but it was launching a Node process and taking 300ms. This task is related to Dukat, a tool to convert TypeScript declarations to Kotlin declarations. Since we aren’t using TypeScript in our project we can manually exclude the task from running:

tasks.withType(DukatTask::class) { enabled = false }

After doing this, hot reloads were now taking ~4 seconds.

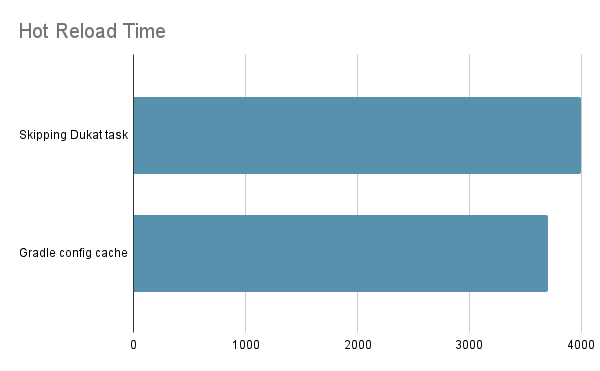

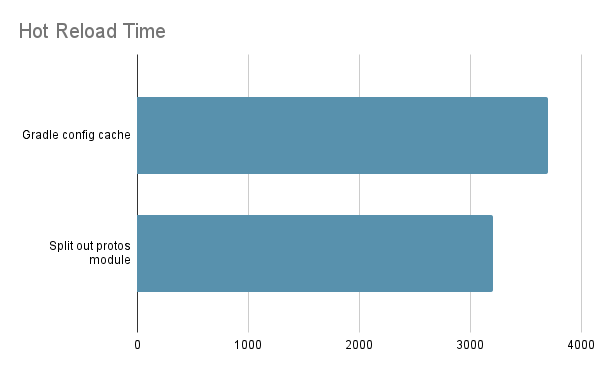

Config Cache

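The Gradle configuration cache reuses the result of the configuration phase when build scripts haven’t changed. Turning it on in gradle.properties reduced build time by ~300ms (this is the Gradle 7.x property name; from Gradle 8.1 the stable name is org.gradle.configuration-cache):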

org.gradle.unsafe.configuration-cache=true

At this point builds were taking ~3.7 seconds.

Splitting Out Protos

After intense scrutiny of the Gradle build times, I couldn’t see any more obvious opportunities for speedups. But Gradle build time is only part of the story for hot reload times: we also have to consider the on-device time to download and parse the Zipline files and load them into QuickJS.

Measuring on-device load time showed something surprising: it was taking ~500ms for QuickJS to load a single Zipline module that our app depended on (in comparison, our own app module only took a few milliseconds to load). This dependency was a giant module of proto definitions, but we only actually needed a few of them. Removing the dependency and adding explicit dependencies for the protos that we used eliminated the 500ms module load time.

Now the hot reload time was down to ~3.2 seconds.
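
Per-module timing like the measurement above does not need special tooling. The sketch below shows one way to do it in plain Kotlin; `timeModuleLoads` is a hypothetical helper and the `load` lambda stands in for whatever actually loads a module into QuickJS.

```kotlin
import kotlin.system.measureNanoTime

/** Times `load` for each module and returns (module name, milliseconds), slowest first. */
fun timeModuleLoads(moduleNames: List<String>, load: (String) -> Unit): List<Pair<String, Double>> =
    moduleNames
        .map { name -> name to (measureNanoTime { load(name) } / 1_000_000.0) }
        .sortedByDescending { it.second }
```

Sorting slowest first makes an outlier, such as one module taking hundreds of milliseconds while the rest take a few, stand out immediately.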

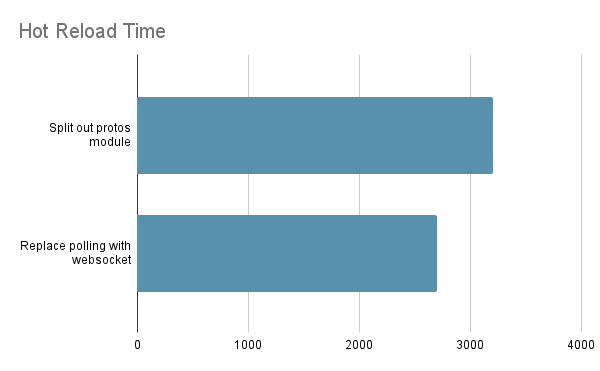

Using Websockets Instead of Polling

In order for the app to know that a new version of the code is available, it polls the hot reload server every 500ms to check for changes. I realized that if we instead used a websocket, we wouldn’t have to wait for the next poll to load the changes.

I added websocket support to the hot reload server and set it up to send a message to all open connections as soon as new code was available. On the app side, we connect to the websocket if available, but fall back to 500ms polling if not, to ensure that hot reloading always works.

After setting up the websocket, hot reloads were now down to ~2.7 seconds.
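
The "websocket if available, otherwise poll" decision can be sketched as follows. This is a simplified illustration, not Zipline's client code: `PushChannel`, `Poller`, `Transport`, and `watchForNewCode` are hypothetical names, and the actual websocket and HTTP calls are hidden behind the two interfaces.

```kotlin
/** Hypothetical push channel, e.g. a websocket client. Returns false if it cannot connect. */
fun interface PushChannel {
    fun connect(onNewCode: () -> Unit): Boolean
}

/** Hypothetical poller that checks the hot reload server on a fixed interval. */
fun interface Poller {
    fun start(intervalMillis: Long, onNewCode: () -> Unit)
}

enum class Transport { WEBSOCKET, POLLING }

// Prefer the push channel so changes arrive as soon as the server announces them;
// fall back to 500ms polling so hot reload still works when the websocket is unavailable.
fun watchForNewCode(push: PushChannel, poller: Poller, onNewCode: () -> Unit): Transport {
    val connected = try {
        push.connect(onNewCode)
    } catch (e: Exception) {
        false
    }
    if (connected) return Transport.WEBSOCKET
    poller.start(intervalMillis = 500, onNewCode = onNewCode)
    return Transport.POLLING
}
```

Keeping the fallback inside one function means the rest of the app only ever sees the `onNewCode` callback and does not care which transport delivered it.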

Can We Make It Even Faster?

Under 3 seconds is pretty fast, but how far can we go? Here’s the breakdown of what’s taking time currently:

| Action | Time | Description |
|---|---|---|
| Kotlin compile time | 1.8s | A Kotlin/JS Hello World app takes ~1s to compile, so this seems pretty reasonable given all the extra code and dependencies |
| Fetch and load app manifest | 20ms | |
| Fetch all modules over HTTP | 60ms | This and the next couple of items will be app-dependent |
| Compare sha256 of modules | 30ms | |
| QuickJS module loading | 360ms | App-specific dependencies, as well as common modules like the Kotlin standard library |
| Running the Zipline app | 30ms | |
| Misc | 30ms | Unmeasured time: things like actual UI rendering, how long it takes to send and receive the websocket message, etc. |
| Measurement latency | 250ms | I’m manually using a stopwatch to time load times, so this is my reaction time delay |

There are still a few micro-optimizations available: we could load unchanged modules from the local disk cache instead of fetching them over the network, or try to minimize our app module dependencies further. Ultimately, though, we’re bottlenecked by the Kotlin/JS compile time at this point. Without improvements to the Kotlin compiler, we’re unlikely to get hot reload speeds below 2 seconds. But that’s fine: under 3 seconds is still amazing and better than I thought was possible when starting this project.
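
As a quick sanity check on the breakdown, the rows can be added up in a few lines of Kotlin. The numbers are copied straight from the table; the names `breakdownMillis`, `totalMillis`, and `sharePercent` are just for this illustration.

```kotlin
import kotlin.math.roundToInt

// Timings in milliseconds, copied from the breakdown table.
val breakdownMillis: Map<String, Int> = linkedMapOf(
    "Kotlin compile time" to 1800,
    "Fetch and load app manifest" to 20,
    "Fetch all modules over HTTP" to 60,
    "Compare sha256 of modules" to 30,
    "QuickJS module loading" to 360,
    "Running the Zipline app" to 30,
    "Misc" to 30,
    "Measurement latency" to 250,
)

val totalMillis: Int = breakdownMillis.values.sum()

/** Percentage of the total attributed to one row, rounded to the nearest whole percent. */
fun sharePercent(row: String): Int =
    (100.0 * breakdownMillis.getValue(row) / totalMillis).roundToInt()
```

The rows sum to 2,580ms, in line with the ~2.7 second stopwatch figure, and `sharePercent("Kotlin compile time")` comes out at 70, which is why the compiler is the bottleneck.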

Here’s how all the improvements look, stacked together:

The Zipline improvements have all been merged upstream and will benefit all users of the library. The project-specific changes have been documented so that other projects can achieve the same speedups that we did.

Closing Notes

I spent quite a bit of time on this project, but it was worth it! Getting Zipline hot reload times to under 3 seconds improves the developer experience and helps us iterate faster. Additionally, I had a ton of fun learning about how Zipline works.

Projects like Zipline often feel like magic to me initially. But once I dig into one, I can see that, at the end of the day, it’s just code: code that I can understand, code that I can play with, and code that I can make better.